Current state of AI assistants

Voice assistants for the privacy-minded


2025-03-29

With the rise of ChatGPT and the like, self-hosted chat systems have become more relevant for privacy-sensitive settings, or more generally whenever one does not want to hand all the data entered into such systems to a central provider.

For this, one typically does not need a lot of RAM and CPU, but plain GPU power. As discussed in the post about GPU forwarding in Proxmox, I am using an Nvidia RTX 6000 with 24 GB VRAM for some AI experiments.

This post first introduces some basics and OpenWebUI, discusses which models are currently useful, and describes how to integrate Stable Diffusion into the system. It concludes with how this setup can be used.

A good introduction is also available in this Talk 1.

Basics

First of all, I want to emphasize that an AI model as of now (March 2025) is always just a model that predicts the next tokens/words in a non-linear solution space. Even though some output might sound like thinking and processing, it is still a very smart prediction model for the next tokens.

This remains true when multiple things are coupled, for example image generation, prompting, web search and code execution. Of course, this makes it possible to provide context and let the AI decide on its own to use one of the available tools, such as searching the web or executing Python, which can improve answers a lot. By now, we have all had our first experiences with LLMs/AI and how they can be awfully wrong, biased, or stubbornly keep going the wrong way.
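To make the "prediction of the next token" idea concrete, here is a toy sketch. The vocabulary and scores are made up for illustration; a real LLM computes such scores with a neural network over a vocabulary of many thousands of tokens, and usually samples instead of always picking the most likely token.

```python
import math

def softmax(scores):
    """Turn raw scores (logits) into probabilities that sum to 1."""
    top = max(scores.values())
    exps = {tok: math.exp(s - top) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical toy "model": made-up scores for the next token, given the previous one.
TOY_LOGITS = {
    "the": {"cat": 2.0, "dog": 1.5, "sat": -1.0},
    "cat": {"sat": 2.5, "the": 0.1, "dog": -0.5},
    "dog": {"sat": 1.0, "down": 0.2},
    "sat": {"down": 3.0, "the": 0.5},
}

def generate(start, max_tokens=5):
    """Greedy decoding: repeatedly append the most probable next token."""
    tokens = [start]
    while len(tokens) < max_tokens and tokens[-1] in TOY_LOGITS:
        probs = softmax(TOY_LOGITS[tokens[-1]])
        tokens.append(max(probs, key=probs.get))
    return tokens

print(" ".join(generate("the")))  # the cat sat down
```

Nothing in this loop "thinks"; the text emerges purely from repeatedly choosing a likely continuation.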

Some definitions at first:

- Model: a model is a snapshot of a pre-trained AI architecture. Retraining a specific model without changing the architecture does not increase the size, but simply changes the weights (so the values) somewhere in the model.

- Vector database: a vector database contains documents which are considered relevant. It is used either to structure the training dataset or to provide additional context to a pretrained model. The latter is known as retrieval-augmented generation (RAG). Such a database is not required; most AI models work fine without one and have all their “knowledge” stored in the weights.

- Tools: when activated, tools are available to the LLM, for example letting it query the web for additional information. The code execution tool runs completely in the browser using pyodide, a Python interpreter compiled to WebAssembly.
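The retrieval step of a vector database can be sketched in a few lines. This is a minimal illustration, not how OpenWebUI implements it: the `embed` function is a stand-in that counts words, whereas a real setup would use an embedding model, and the three documents are made up.

```python
import math
from collections import Counter

def embed(text):
    """Stand-in embedding: bag-of-words counts (a real setup uses an embedding model)."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Hypothetical document store
DOCUMENTS = [
    "ollama serves local models on port 11434",
    "stable diffusion generates images from text prompts",
    "pgvector adds vector search to postgresql",
]
INDEX = [(doc, embed(doc)) for doc in DOCUMENTS]

def retrieve(question, k=1):
    """Return the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

def build_prompt(question):
    """Prepend the retrieved context to the question before sending it to the model."""
    context = "\n".join(retrieve(question))
    return f"Context:\n{context}\n\nQuestion: {question}"

print(build_prompt("which port does ollama use"))
```

The model weights stay untouched; only the prompt grows by the retrieved context.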

With OpenWebUI one can host a web interface like ChatGPT quite easily.

The important part is that the models and their weights are publicly available for the environment described in the following. We can therefore run this completely offline.

Chat with gemma3 describing a picture. I first have to inform it about its capabilities.

LLM with OpenWebUI

OpenWebUI makes it possible to interact with models through a web-based User Interface. This is nothing new, but it also enables features like

- Optical Character Recognition (OCR) on PDFs

- analyzing of PDFs by importance

- Code interpreter using pyodide

- Image generation using stable diffusion

- Web search using the DuckDuckGo API or any other search API

- Text-to-Speech (TTS) and Speech-to-Text (STT) using whisper.

Note that none of the freely available LLMs supports audio input or output directly. This is a major difference from OpenAI's GPT-4o, which is said to support video and audio input as well as audio output, and can also vary the tone of its output 2.

Discussion about existing models

| Model | Size | Use case |
|---|---|---|
| llava:7b | 6.2GB | vision and text |
| llama3:8b | 4.4GB | text only |
| gemma3:27b | 17GB | text and partially vision |
| deepseek-r1:32b | 19GB | reasoning text |
| deepseek-r1:1.5b | 1.1GB | reasoning, quite bad performance |
| qwen2.5-coder:7b | 4.4GB | usage for continue dev coding |
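A quick way to reason about the sizes in the table is to check which models could sit in the 24 GB of VRAM at the same time. The sketch below uses the sizes from the table; the 2 GB headroom for context and runtime overhead is an assumption, not a measured value, and actual memory use depends on context length.

```python
VRAM_GB = 24.0      # VRAM of the card mentioned in this post
HEADROOM_GB = 2.0   # assumption: rough allowance for context and runtime overhead

# Model sizes in GB, copied from the table above
MODELS = {
    "llava:7b": 6.2,
    "llama3:8b": 4.4,
    "gemma3:27b": 17.0,
    "deepseek-r1:32b": 19.0,
    "deepseek-r1:1.5b": 1.1,
    "qwen2.5-coder:7b": 4.4,
}

def fits_together(names, vram_gb=VRAM_GB, headroom_gb=HEADROOM_GB):
    """True if the listed models could plausibly be resident in VRAM at once."""
    return sum(MODELS[n] for n in names) + headroom_gb <= vram_gb

for combo in (
    ["gemma3:27b"],
    ["gemma3:27b", "qwen2.5-coder:7b"],
    ["gemma3:27b", "deepseek-r1:32b"],
):
    print(combo, "fits" if fits_together(combo) else "does not fit")
```

Under these assumptions a large chat model and a small coder model fit side by side, while the two largest models have to be swapped in and out.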

Integration of Stable Diffusion image generation

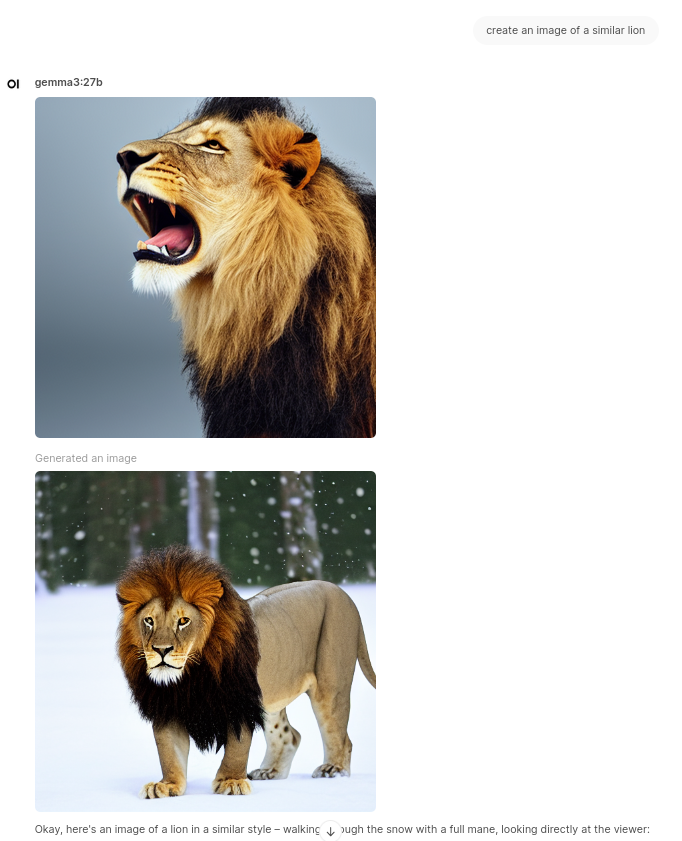

As said before, the language models are only good at their own thing, not at producing images. Therefore, one can integrate Stable Diffusion into OpenWebUI by providing an endpoint in the settings. Here I am using a dockerized version as well, which is based on the work done by AUTOMATIC1111.

Unfortunately, this only supports Stable Diffusion 1.5, while the newer models require changes due to their adjusted architecture. The generated images are on topic and look okay, though I expected them to be a little better.

Generally, there is a whole industry around improving prompts for image generators. One typically does not prompt Stable Diffusion directly, but first asks an LLM to write a good prompt for the specific need. This is also supported by OpenWebUI and produces the results shown:

Generated Lion using Stable Diffusion 1.5 directly from the OpenWebUI integration. The style of the prior images has been adapted mostly.
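The two-step flow described above (ask an LLM for a prompt, then pass it to the image generator) can be sketched outside of OpenWebUI as well. This is a minimal sketch, assuming Ollama is reachable on port 11434 and the AUTOMATIC1111 web UI was started with `--api` on port 8080; the instruction text, the default model and the helper names are illustrative choices, not what OpenWebUI does internally.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama on its default port
SD_URL = "http://localhost:8080"       # assumption: AUTOMATIC1111 web UI with --api

def ollama_payload(idea, model="gemma3:27b"):
    """Request body for Ollama's /api/generate asking for an image prompt."""
    instruction = (
        "Write one short, detailed Stable Diffusion prompt for: "
        + idea
        + ". Reply with the prompt only."
    )
    return {"model": model, "prompt": instruction, "stream": False}

def txt2img_payload(prompt, steps=20, size=512):
    """Request body for the web UI's /sdapi/v1/txt2img endpoint."""
    return {"prompt": prompt, "steps": steps, "width": size, "height": size}

def post_json(url, payload):
    """POST a JSON body and decode the JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())

def generate_image(idea):
    """Needs both services running; returns the first image as base64 text."""
    refined = post_json(OLLAMA_URL + "/api/generate", ollama_payload(idea))["response"]
    result = post_json(SD_URL + "/sdapi/v1/txt2img", txt2img_payload(refined.strip()))
    return result["images"][0]

# Only the request body is printed here, so this runs without any service.
print(json.dumps(ollama_payload("a lion in watercolor style"), indent=2))
```

Calling `generate_image("a lion in watercolor style")` with both services up would return a base64-encoded image that can be decoded and written to a file.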

An alternative to AUTOMATIC1111 is ComfyUI, which I have not deployed yet (and of course Gemini or OpenAI can be used as a hosted service as well). The weights for the most recent Stable Diffusion release are available after providing some contact information at https://huggingface.co/stabilityai/stable-diffusion-3.5-medium

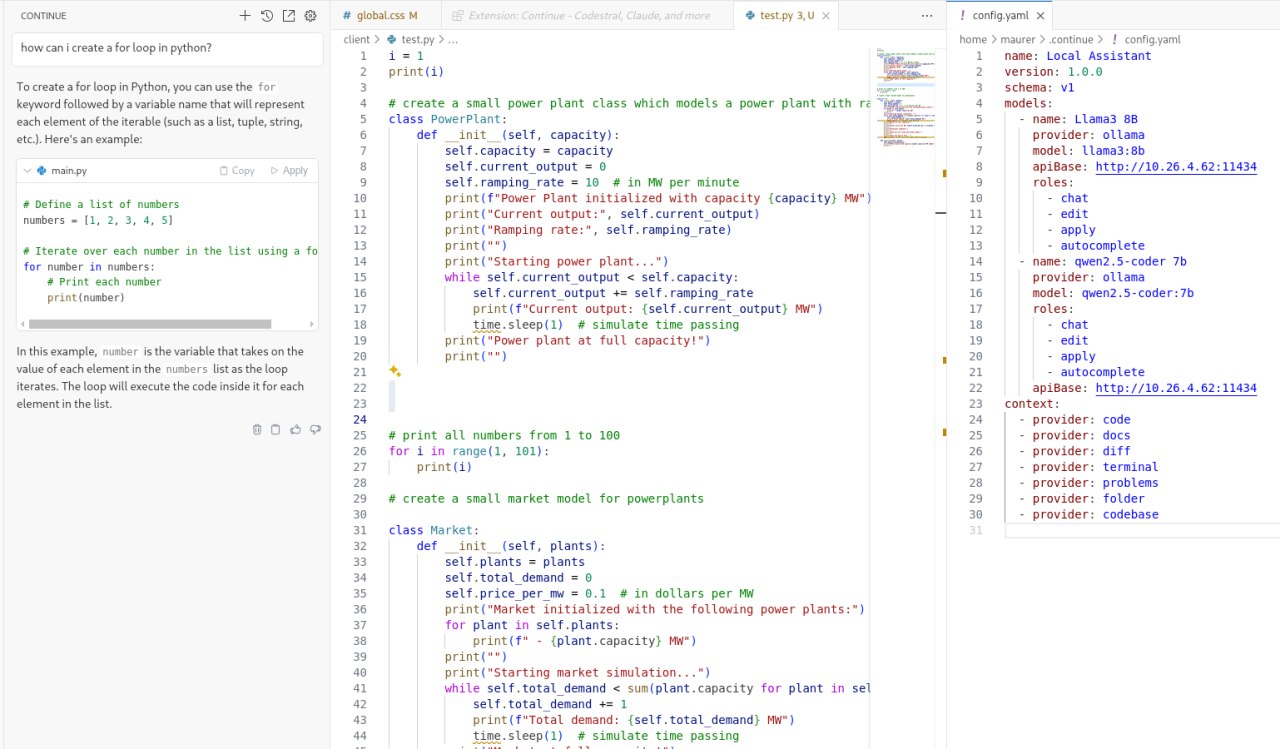

Integration of Ollama models into source code editor using continue.dev

For the integration of a local coding agent, the VSCodium extension of continue.dev is a great start. The extension allows setting a local Ollama server URL as the host.

The following image shows a chat window on the left, code generated using the autocompletion feature in the middle, and the required configuration on the right-hand side of the window.

Here, the coder models are better trained for the completion task, while general LLMs do not behave as well with autocompletion. The extension allows setting different models for different tasks and works completely without a login.

Usage of Continue.dev in combination with remote Ollama server and qwen2.5-coder:7b model

Services file

There are three services running for my demonstration:

- ollama - hosts the models and provides an API on port 11434, it has access to the GPU

- open-webui - provides the interface to interact with ollama as well as additional features on port 3000

- stable-diffusion-webui - hosts the image generation model, has access to the GPU as well and exposes the web UI and API on port 8080

The first two services are described here 3, while the stable-diffusion-webui was originally created by AUTOMATIC1111 and is available as a single image in 4.

Docker Compose Services file

    services:
      ollama:
        image: ollama/ollama:${OLLAMA_DOCKER_TAG-latest}
        container_name: ollama
        volumes:
          - ./ollama:/root/.ollama
        ports:
          - "11434:11434/tcp"
        restart: unless-stopped
        tty: true
        deploy:
          resources:
            reservations:
              devices:
                - driver: nvidia
                  count: 1 # alternatively, use `count: all` for all GPUs
                  capabilities: [gpu]

      open-webui:
        image: ghcr.io/open-webui/open-webui:cuda
        container_name: open-webui
        volumes:
          - ./open-webui:/app/backend/data
        depends_on:
          - ollama
        ports:
          - ${OPEN_WEBUI_PORT-3000}:8080
        environment:
          - 'OLLAMA_BASE_URL=http://ollama:11434'
          - 'WEBUI_SECRET_KEY='
        restart: unless-stopped

      stable-diffusion-webui:
        image: universonic/stable-diffusion-webui:minimal
        command: --api --no-half --no-half-vae --precision full
        runtime: nvidia
        container_name: stable-diffusion
        restart: unless-stopped
        ports:
          - "8080:8080/tcp"
        volumes:
          - ./stablediffusion/inputs:/app/stable-diffusion-webui/inputs
          - ./stablediffusion/textual_inversion_templates:/app/stable-diffusion-webui/textual_inversion_templates
          - ./stablediffusion/embeddings:/app/stable-diffusion-webui/embeddings
          - ./stablediffusion/extensions:/app/stable-diffusion-webui/extensions
          - ./stablediffusion/models:/app/stable-diffusion-webui/models
          - ./stablediffusion/localizations:/app/stable-diffusion-webui/localizations
          - ./stablediffusion/outputs:/app/stable-diffusion-webui/outputs
        cap_drop:
          - ALL
        cap_add:
          - NET_BIND_SERVICE
        deploy:
          resources:
            reservations:
              devices:
                - driver: nvidia
                  count: 1 # alternatively, use `count: all` for all GPUs
                  capabilities: [gpu]

The language models are all available from the Ollama library, for example https://ollama.com/library/deepseek-r1:32b, and are automatically downloaded into the models folder.

The Stable Diffusion weights are available at https://huggingface.co/stabilityai/.
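Once the stack is running, a small script can confirm that all three services answer on their host ports. This is a sketch under assumptions: the services are reached via `localhost`, the ports are the defaults from the compose file, and the probed paths (`/api/tags` for Ollama, `/sdapi/v1/sd-models` for the web UI started with `--api`) are simply lightweight GET endpoints chosen for the check.

```python
import urllib.error
import urllib.request

# Host ports as used in the compose file; hostnames and paths are assumptions.
SERVICES = {
    "ollama": "http://localhost:11434/api/tags",
    "open-webui": "http://localhost:3000/",
    "stable-diffusion-webui": "http://localhost:8080/sdapi/v1/sd-models",
}

def is_up(url, timeout=3.0):
    """True if the URL answers with a 2xx status within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (urllib.error.URLError, OSError, ValueError):
        return False

def check_all(services=SERVICES, probe=is_up):
    """Probe every service and return a name -> reachable mapping."""
    return {name: probe(url) for name, url in services.items()}

def report(status):
    """Format the result of check_all() as one line per service."""
    return "\n".join(f"{name:24s} {'up' if ok else 'DOWN'}" for name, ok in status.items())
```

Running `print(report(check_all()))` on the Docker host prints one line per service. The `probe` parameter exists only so the check can be exercised without a running stack.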

Conclusion

There are also possibilities to manually build setups in which models interact with other models, using a toolkit like Agno. Typically, this also makes it possible to integrate hosted LLMs/GPTs like ChatGPT, Mistral or DeepSeek.

Further TODOs

Further TODOs on this topic for me are:

- testing the usage with a vector database

  - I like PostgreSQL, which can be used with the pgvector extension

  - https://docs.phidata.com/reference/vectordb/pgvector

  - https://dev.to/stephenc222/how-to-use-postgresql-to-store-and-query-vector-embeddings-h4b

- usage of agent toolkits like Agno

- integration of other picture generators like stable-diffusion-3.5 using ComfyUI

- see how specialized context, for example about energy market simulations, can be added

Performance

The performance on the RTX 6000 is quite good: for large models, token generation is fast enough that one can read along with the generated text, and it is faster for smaller ones. Of course, the reasoning models take quite a long time, sometimes 30 s, to think about something, and tend to overthink everything. So for small checks, gemma3, which is a non-reasoning model, is very good and direct.

The card uses its full TDP of 260 W while generating text or images, and idles at around 10-80 W when not actively prompted. Startup time of the models can be anywhere between 5 s and 60 s depending on the model.
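To put numbers on generation speed and startup time, the timing fields that Ollama returns with a non-streaming `/api/generate` response can be used: `eval_count` is the number of generated tokens, and `eval_duration` and `load_duration` are given in nanoseconds. The sample values below are made up to show the arithmetic; they are not measurements from my setup.

```python
NANOSECONDS = 1e9

def tokens_per_second(response):
    """Generation speed from Ollama's eval_count and eval_duration (ns)."""
    return response["eval_count"] / (response["eval_duration"] / NANOSECONDS)

def load_seconds(response):
    """Model load (startup) time in seconds from load_duration (ns)."""
    return response.get("load_duration", 0) / NANOSECONDS

# Hypothetical response excerpt, for illustration only
sample = {
    "eval_count": 300,
    "eval_duration": 12_000_000_000,
    "load_duration": 5_000_000_000,
}

print(f"{tokens_per_second(sample):.1f} tokens/s, load time {load_seconds(sample):.1f} s")
```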

On the one hand, it is very exciting and interesting how far you can get with a bunch of open-source tools; on the other hand, it is striking that there is nothing similar to the GPT-4o experience of fluently integrating voice, video and audio feedback as well as images directly.

Before GPT-4o, OpenAI used a similar workflow:

To achieve this, Voice Mode is a pipeline of three separate models: one simple model transcribes audio to text, GPT‑3.5 or GPT‑4 takes in text and outputs text, and a third simple model converts that text back to audio. This process means that the main source of intelligence, GPT‑4, loses a lot of information—it can’t directly observe tone, multiple speakers, or background noises, and it can’t output laughter, singing, or express emotion. 2
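The three-stage pipeline from the quote, which is also what a whisper-based STT/TTS setup amounts to, can be sketched as plain function composition. The three stage functions below are stand-ins invented for this illustration; in a real setup they would call a speech-to-text model, the LLM and a text-to-speech model.

```python
def voice_turn(audio, transcribe, chat, synthesize):
    """One voice interaction: audio in -> text -> LLM answer -> audio out."""
    text_in = transcribe(audio)   # tone, speakers and background noise are lost here
    text_out = chat(text_in)      # the LLM only ever sees plain text
    return synthesize(text_out)   # a separate model reads the answer aloud

# Stand-in stages so the sketch runs without any model (hypothetical helpers)
def fake_transcribe(audio):
    return audio.decode("utf-8")

def fake_chat(text):
    return f"You said: {text}"

def fake_synthesize(text):
    return text.encode("utf-8")

reply = voice_turn(b"hello", fake_transcribe, fake_chat, fake_synthesize)
print(reply)  # b'You said: hello'
```

The sketch makes the limitation visible: everything that does not survive the transcription step never reaches the language model.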

Similar advances are somewhat possible with multimodal agents, but this is not yet available in OpenWebUI and similar tools. We will see what the future brings on this.